This post discusses the Generic Load-Balancer Application Recovery Kit (ARK) for SIOS LifeKeeper for Linux, and specifically how to configure it on Google Cloud Platform (GCP). SIOS ARKs are plug-ins for SIOS LifeKeeper products that add platform or application awareness.

This blog uses a two-node NFS cluster to show how the NFS exports it provides can be accessed through the load balancer.

SIOS has created this ARK to facilitate client redirection in SIOS LifeKeeper clusters running in GCP.

Because GCP does not support gratuitous ARP (a broadcast in which a host announces its own IP-to-MAC address mapping), clients cannot connect directly to traditional cluster virtual IP addresses. Instead, clients connect to a load balancer, which redirects traffic to the active cluster node. GCP provides separate load-balancer solutions that operate at layer 4 (TCP or UDP) or layer 7 (HTTP/HTTPS). A load balancer can be configured with private or public frontend IP(s), a health probe that determines which node is active, a set of backend IP addresses (one for each node in the cluster) and incoming/outgoing network traffic rules.

Traditionally, the health probe would monitor the active port of an application to determine which node that application is running on. The SIOS Generic Load-Balancer ARK instead has the active node listen on a user-defined port, and that port is configured in the GCP load balancer as the health probe port. Only the active cluster node responds to the TCP health check probes, which enables automatic client redirection.
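To make the probe mechanism concrete, here is a minimal sketch that imitates a TCP health probe from any machine that can reach the nodes. It is not part of the SIOS kit; the function names, node names and port shown are placeholders I made up for illustration, and it assumes `bash` and the coreutils `timeout` command are available.

```shell
#!/usr/bin/env bash
# Sketch only: imitates a TCP health probe by attempting a connection.
# The function names are hypothetical and not part of LifeKeeper or GCP.

# Return 0 if a TCP connection to host:port succeeds within 2 seconds.
probe_port() {
  local host="$1" port="$2"
  # bash's /dev/tcp pseudo-device opens a TCP connection to host:port.
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Print, for each node given, whether it answers on the probe port.
# Usage: report_active PORT NODE [NODE...]
report_active() {
  local port="$1"; shift
  local node
  for node in "$@"; do
    if probe_port "$node" "$port"; then
      echo "$node: responding (active)"
    else
      echo "$node: not responding (standby)"
    fi
  done
}
```

For example, `report_active 54321 node-a node-b` (with your own node names or IPs) should report exactly one node as responding once the cluster is configured.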

Installation and configuration in GCP is straightforward and detailed below:

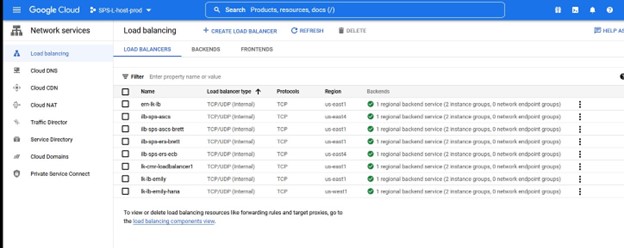

Within the GCP console, select Load balancing.

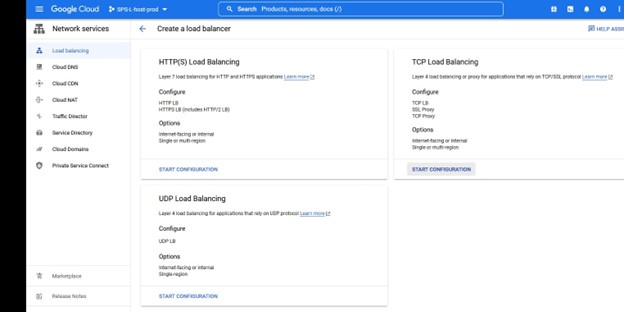

In this instance we want TCP Load Balancing.

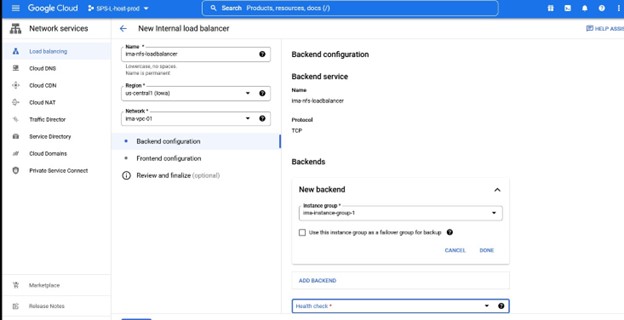

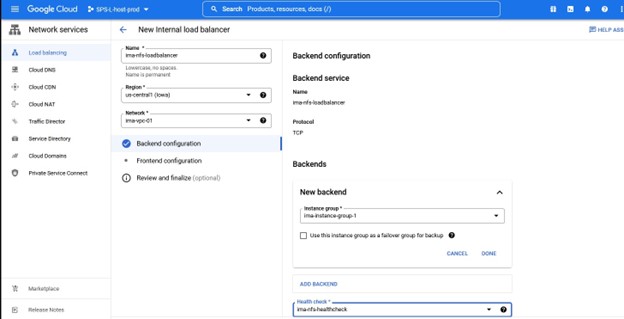

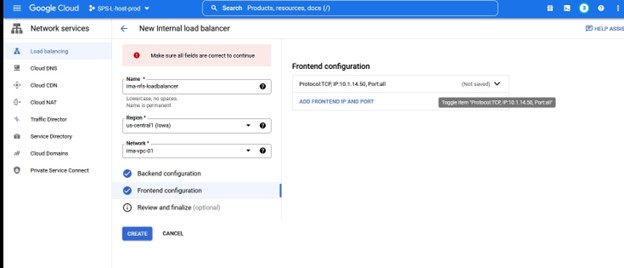

Create the load balancer. You will select where it is to be deployed (in GCP, the project and region) and give it a name. I like to use a name that lines up with the cluster I'm using the load balancer with; for example, IMA-NFS-LB will sit in front of both IMA-NFS nodes.

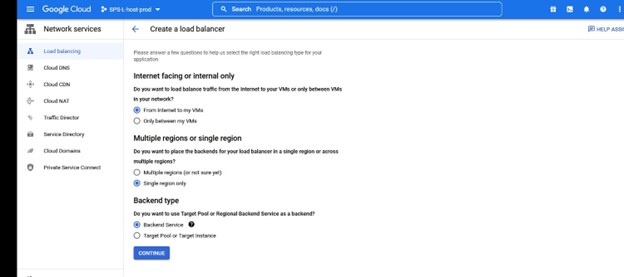

You can choose whether the load balancer will be Internet-facing or internal to your VPC. In this case I'm configuring an internal-only load balancer to front my NFS server for use only within my VPC.

Once you have decided on the name, region and so on, you will be asked to assign a backend configuration. This requires an instance group that contains the HA nodes the load balancer will front.

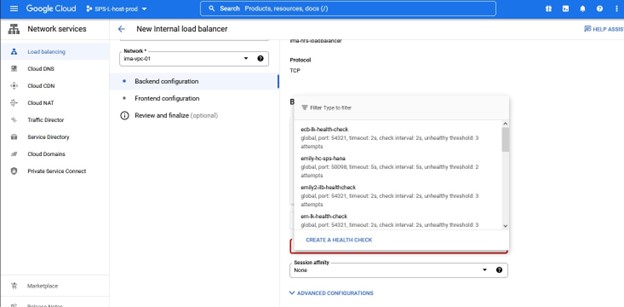

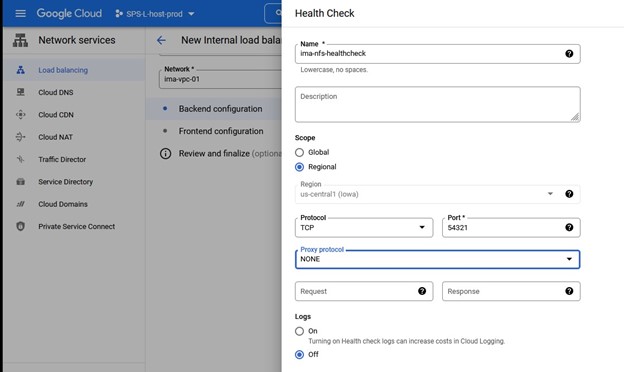

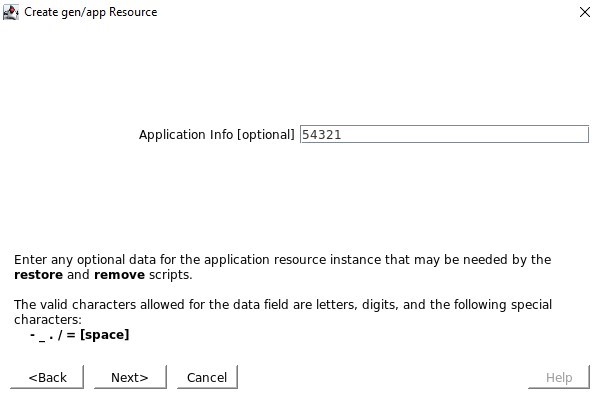

Once you have assigned an instance group, define a health check. Its port must match the port you will use in the LifeKeeper Generic Load-Balancer configuration; in this case I'm using 54321.

Again, pay attention to the port number, as it will be used with LifeKeeper.

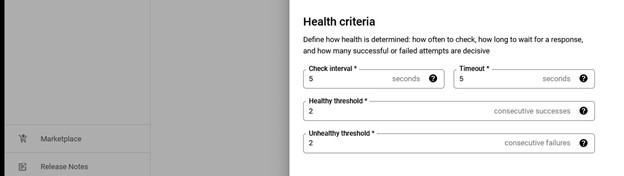

I just stuck with the default values for Health criteria.

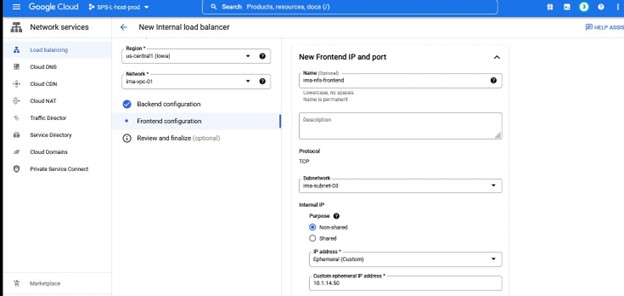

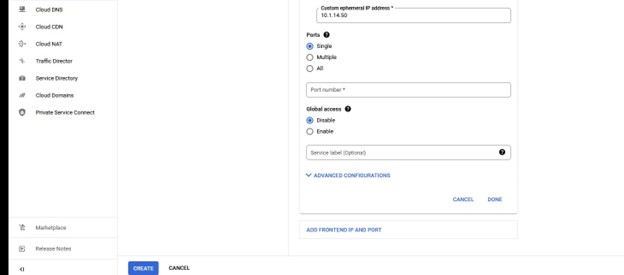

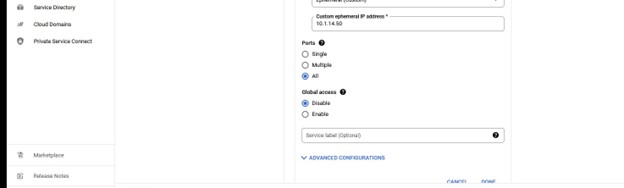

Once the backend configuration and health check have been entered for the load balancer, you need to define the frontend configuration. This consists of the subnet, region and IP address you want to create for the load balancer.

The IP address you configure here must match the LifeKeeper IP that you are protecting.

Once you are happy with the configuration, you can either review it or simply click create.

Once we select “create”, GCP starts deploying the load balancer. This can take several minutes. Once it is complete, the configuration moves on to the SIOS Protection Suite.
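For readers who prefer the command line, the console steps above can be approximated with `gcloud`. This is a sketch under assumptions, not the procedure used for the screenshots: every resource name, the region, zone, network, subnet and frontend IP below are placeholders I chose for illustration, and the flags should be checked against the current `gcloud` reference before use. By default the function only prints the commands; set `DRY_RUN=0` to run them.

```shell
#!/usr/bin/env bash
# Sketch only: CLI approximation of the console steps for an internal TCP
# load balancer. All values below are illustrative assumptions.
REGION="us-central1"          # assumed region
ZONE="us-central1-a"          # assumed zone of the instance group
NETWORK="default"             # assumed VPC network
SUBNET="default"              # assumed subnet
LB_IP="10.128.0.100"          # assumed frontend IP (the protected LifeKeeper IP)
PROBE_PORT=54321              # health check port used in this post
HC_NAME="ima-nfs-hc"          # hypothetical resource names
BACKEND_NAME="ima-nfs-lb"
IG_NAME="ima-nfs-ig"
FR_NAME="ima-nfs-fr"

# Print the command when DRY_RUN is 1 (the default); otherwise execute it.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

create_internal_tcp_lb() {
  # 1. TCP health check on the port the active node will listen on.
  run gcloud compute health-checks create tcp "$HC_NAME" \
    --region="$REGION" --port="$PROBE_PORT"
  # 2. Internal backend service that uses the health check.
  run gcloud compute backend-services create "$BACKEND_NAME" \
    --load-balancing-scheme=internal --protocol=tcp --region="$REGION" \
    --health-checks="$HC_NAME" --health-checks-region="$REGION"
  # 3. Attach the instance group containing the cluster nodes.
  run gcloud compute backend-services add-backend "$BACKEND_NAME" \
    --region="$REGION" --instance-group="$IG_NAME" \
    --instance-group-zone="$ZONE"
  # 4. Frontend (forwarding rule) with the chosen internal IP.
  run gcloud compute forwarding-rules create "$FR_NAME" \
    --region="$REGION" --load-balancing-scheme=internal \
    --network="$NETWORK" --subnet="$SUBNET" --address="$LB_IP" \
    --ip-protocol=TCP --ports=ALL \
    --backend-service="$BACKEND_NAME" --backend-service-region="$REGION"
}
```

Calling `create_internal_tcp_lb` prints four commands for review. Note that the VPC firewall may also need to allow the health check probes to reach the nodes on the probe port.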

Configuration with SIOS Protection Suite

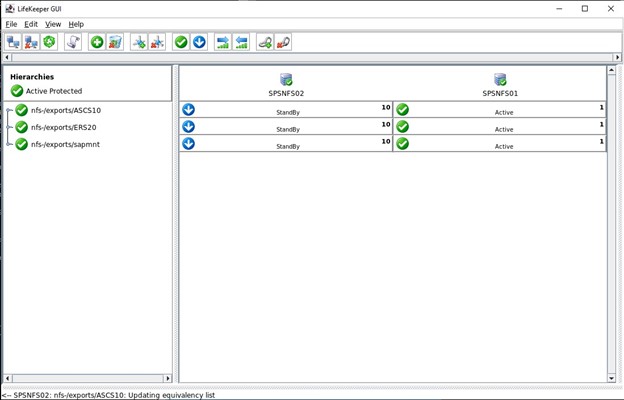

For this blog I have configured three NFS exports to be protected using SIOS Protection Suite for Linux (SPS-L). The three exports are configured to use the same IP as the GCP load balancer's frontend IP. I'm using DataKeeper to replicate the data stored on the exports.

The first step is to obtain the scripts. The simplest way is to use wget, but you can also download the entire package and upload the RPM directly to the nodes using WinSCP or a similar tool. You need to install the Hotfix on all nodes in the LifeKeeper cluster.

The entire recovery kit can be obtained here:

http://ftp.us.sios.com/pickup/LifeKeeper_Linux_Core_en_9.5.1/patches/Gen-LB-PL-7172-9.5.1

Individual files can be fetched with wget, for example:

wget http://ftp.us.sios.com/pickup/Gen-LB-PL-7172-9.5.1/Gen-LB-readme.txt

Once downloaded, verify the MD5 sum against the value recorded on the FTP site.

Install the RPM as follows:

rpm -ivh steeleye-lkHOTFIX-Gen-LB-PL-7172-9.5.1-7154.x86_64.rpm

Check that the install was successful by running:

rpm -qa | grep steeleye-lkHOTFIX-Gen-LB-PL-7172

Should you need to remove the RPM for some reason, this can be done by running:

rpm -e steeleye-lkHOTFIX-Gen-LB-PL-7172-9.5.1-7154.x86_64

Below is the GUI showing my three NFS exports that I’ve already configured:

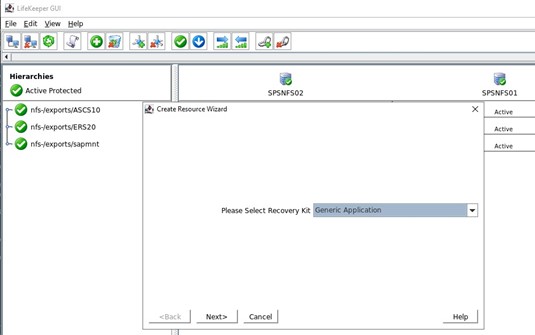

What we need to do within the SIOS Protection Suite is define the Load Balancer using the Hotfix scripts provided by SIOS.

First, create a new resource hierarchy and select Generic Application from the drop-down.

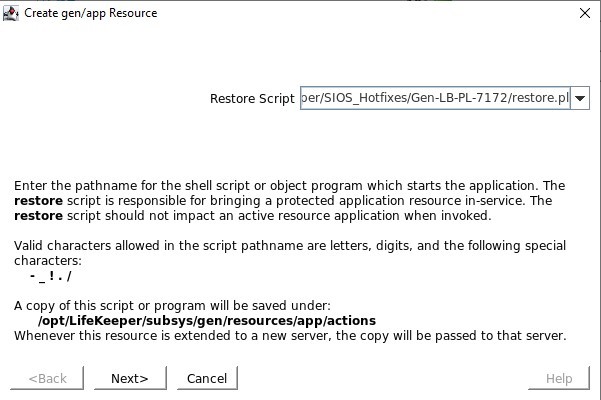

Define the restore.pl script located in /opt/LifeKeeper/SIOS_Hotfixes/Gen-LB-PL-7172/

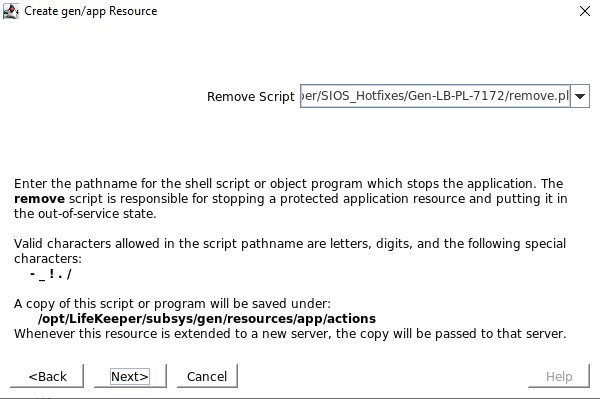

Define the remove.pl script located in /opt/LifeKeeper/SIOS_Hotfixes/Gen-LB-PL-7172/

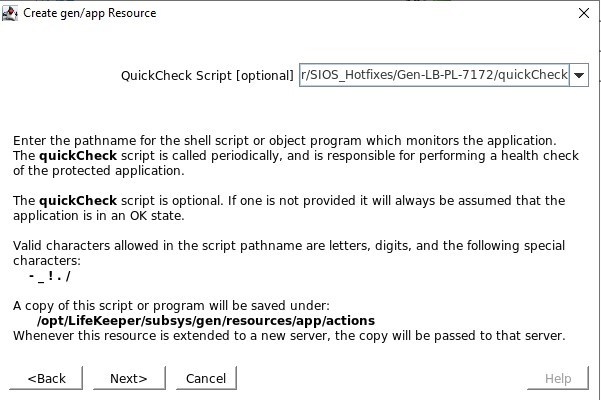

Define the quickCheck script located in /opt/LifeKeeper/SIOS_Hotfixes/Gen-LB-PL-7172/

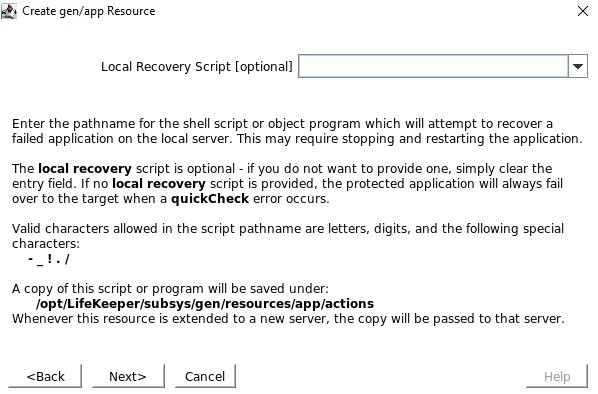

There is no local recovery script, so make sure you clear this input.

When asked for Application Info, enter the same port number that was configured as the health check port, e.g. 54321.

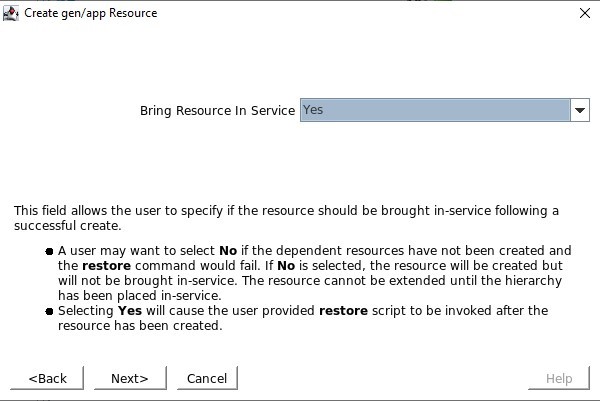

We will choose to bring the resource into service once it's created.

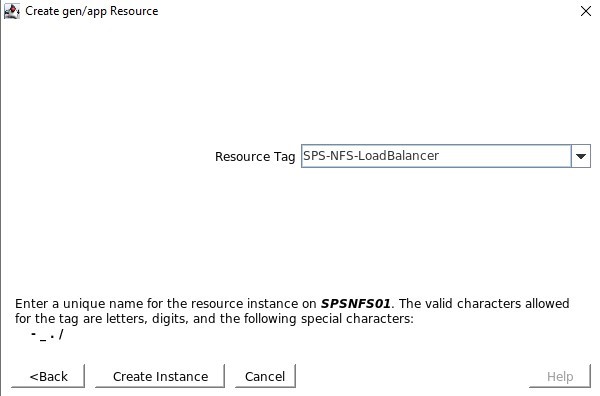

The Resource Tag is the name that will be displayed in the SPS-L GUI. I like to use something that makes the resource easy to identify.

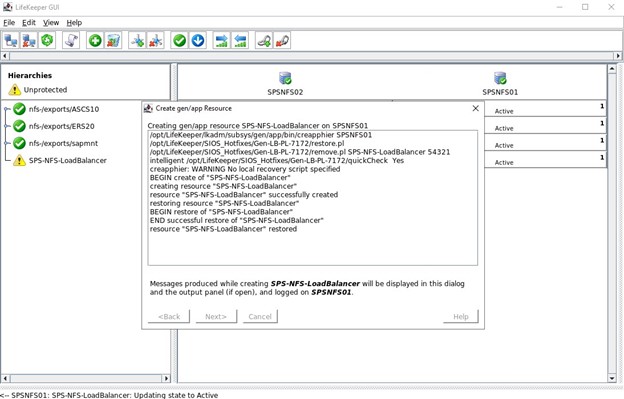

If everything is configured correctly, you will see “END successful restore”. We can then extend the resource to the other node so that it can be hosted on either node.

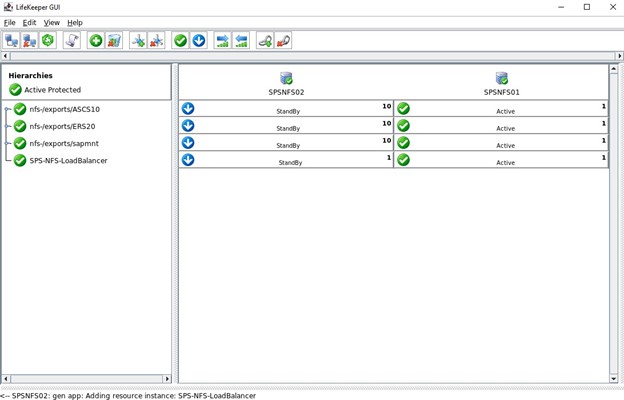

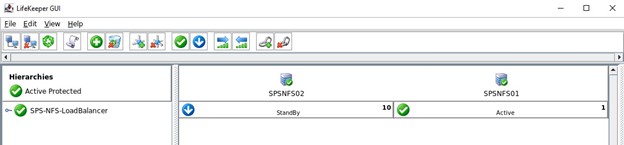

This shows the completed Load Balancer configuration following extension to both nodes.

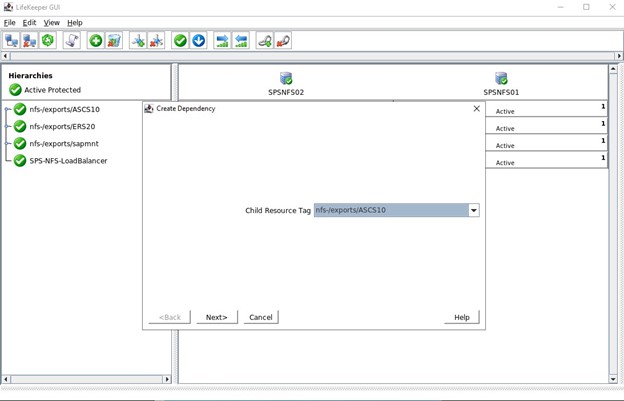

The last step for this cluster is to create child dependencies for the three NFS exports. This means that all the NFS exports, complete with their DataKeeper mirrors and IPs, will rely on the Load Balancer. Should a serious issue occur on the active node, all of these resources will fail over to the other, functioning node.

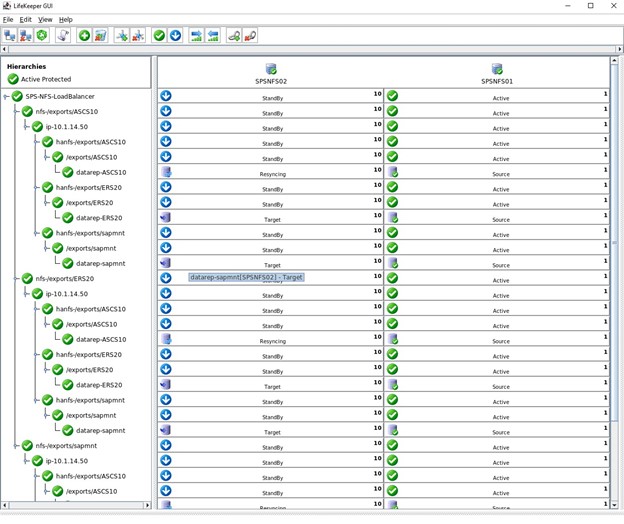

Above is the completed hierarchy in the LifeKeeper GUI. The expanded GUI view shows the NFS exports, IP, filesystems and DataKeeper replicated volumes as children of the Load Balancer resource.

This is just one example of how you can use SIOS LifeKeeper in GCP to protect a simple NFS cluster. The same concept applies to any business-critical application you need to protect. You simply need to use the Load Balancer ARK supplied by SIOS to allow the GCP load balancer (Internet-facing or internal) to determine which node is currently hosting the application.