Background

This post documents my findings on write performance in GCP for a disk that is being replicated between zones. But first, some background. A customer was concerned that DataKeeper was adding a tremendous amount of overhead to their write performance when they tested a synchronous mirror between Google zones in the same region. In their original test, the bitmap file was on the C drive, which was a persistent SSD, and in that configuration they were only pushing about 70 MBps. They tried relocating the bitmap to a GCP Extreme persistent disk, but performance did not improve.

Moving the Bitmap to a Local SSD

I suggested that they move the bitmap to a local SSD, but they were hesitant. They believed the Extreme disk they were using for the bitmap already had latency and throughput as good as or better than a local SSD, so they doubted the move would make a difference. In addition, adding a local SSD is not trivial, since it can only be added when the VM is first provisioned.

Selecting the Instance Type

As I set out on this task, the first thing I discovered was that not every instance type supports local SSD; the E2-Standard-8, for example, does not. For my first test I settled on a C2-Standard-8 instance type, which is considered “compute optimized”. I attached a 500 GB persistent SSD, started running write performance tests, and quickly discovered that I could only get the disk to write at about 140 MBps rather than its maximum throughput of 240 MBps. The customer confirmed that they saw the same thing. It was perplexing, but we decided to move on and try a different instance type.
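
The 240 MBps figure is consistent with how SSD persistent disk (pd-ssd) throughput scales with provisioned size. The short Python sketch below works through that arithmetic. It assumes the roughly 0.48 MBps-per-GB rate Google has documented for pd-ssd; the `pd_ssd_throughput_cap` helper and its optional per-VM cap are hypothetical, since real per-VM limits depend on machine type and vCPU count and should be checked against current GCP documentation.

```python
from typing import Optional

# Assumption: pd-ssd throughput scales at about 0.48 MBps per GB of provisioned
# capacity (verify against current GCP documentation before relying on it).
PD_SSD_MBPS_PER_GB = 0.48


def pd_ssd_throughput_cap(size_gb: float, vm_cap_mbps: Optional[float] = None) -> float:
    """Hypothetical helper: size-based pd-ssd throughput ceiling in MBps.

    If a per-VM limit is supplied, the lower of the two values applies.
    """
    cap = size_gb * PD_SSD_MBPS_PER_GB
    if vm_cap_mbps is not None:
        cap = min(cap, vm_cap_mbps)
    return cap


if __name__ == "__main__":
    # The 500 GB disk used in the tests; no per-VM limit is assumed here.
    print(f"500 GB pd-ssd ceiling: {pd_ssd_throughput_cap(500):.0f} MBps")
```

Note that the size-based ceiling alone does not explain the 140 MBps seen on the C2 instance; it only shows where the 240 MBps target comes from.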

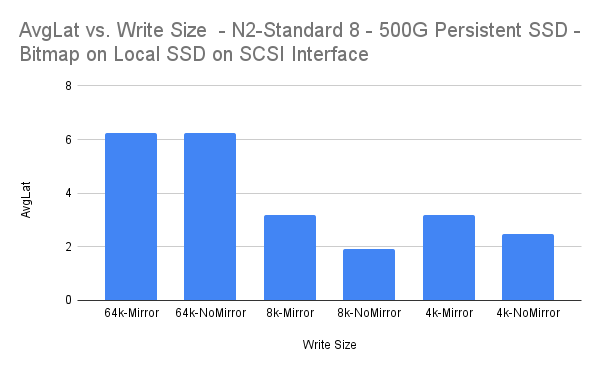

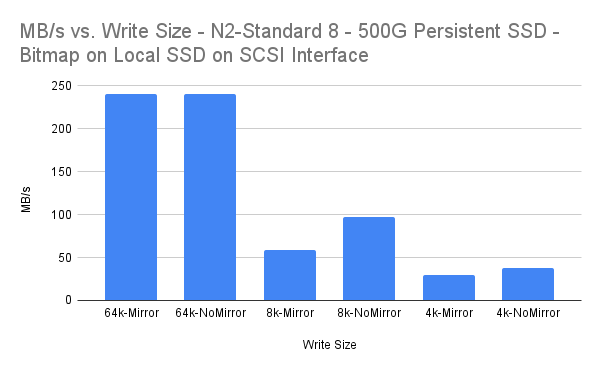

The second instance type we selected was an N2-Standard-8. With this instance type we were able to push the disk to its maximum throughput of 240 MBps when the disk was not being replicated. I then moved the bitmap to the local SSD I had provisioned, repeated the same tests against a synchronous mirror (DataKeeper v8.8.2), and got the results shown below.

The Results

Diskspd test parameters

diskspd.exe -c96G -d10 -r -w100 -t8 -o3 -b64K -Sh -L D:\data.dat

diskspd.exe -c96G -d10 -r -w100 -t8 -o3 -b8K -Sh -L D:\data.dat

diskspd.exe -c96G -d10 -r -w100 -t8 -o3 -b4K -Sh -L D:\data.dat
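
For readers less familiar with diskspd, the switches in these commands can be spelled out as follows. This Python sketch only rebuilds the three command lines and comments each switch; the `diskspd_command` helper is hypothetical and not part of diskspd itself.

```python
THREADS = 8                  # -t: threads per target file
OUTSTANDING_PER_THREAD = 3   # -o: outstanding I/Os per thread


def diskspd_command(block_size: str, target: str = r"D:\data.dat") -> str:
    """Hypothetical helper: build one of the diskspd command lines used above."""
    flags = [
        "-c96G",                       # create a 96 GiB test file
        "-d10",                        # run the test for 10 seconds
        "-r",                          # random I/O
        "-w100",                       # 100% writes, no reads
        f"-t{THREADS}",                # worker threads per target
        f"-o{OUTSTANDING_PER_THREAD}", # outstanding I/Os per thread
        f"-b{block_size}",             # block (write) size: 64K, 8K or 4K
        "-Sh",                         # disable software caching and hardware write caching
        "-L",                          # collect latency statistics
    ]
    return " ".join(["diskspd.exe", *flags, target])


if __name__ == "__main__":
    for bs in ("64K", "8K", "4K"):
        print(diskspd_command(bs))
    # 8 threads x 3 outstanding I/Os keeps 24 writes in flight throughout each run.
    print("writes in flight:", THREADS * OUTSTANDING_PER_THREAD)
```

Because only `-b` changes between runs, the three tests keep the same 24 writes in flight and differ only in write size.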


The Data

| Write Size | Throughput (MBps) | Throughput Overhead |
|---|---|---|
| 64k-Mirror | 240.01 | 0.00% |
| 64k-NoMirror | 240.02 | |
| 8k-Mirror | 58.87 | 39.18% |
| 8k-NoMirror | 96.8 | |
| 4k-Mirror | 29.34 | 21.84% |
| 4k-NoMirror | 37.54 | |
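
The overhead column can be reproduced from the raw numbers: it is the throughput lost to mirroring expressed as a share of the unreplicated throughput, (NoMirror - Mirror) / NoMirror. A minimal Python check, with illustrative names:

```python
# Throughput results (MBps) copied from the table above, as (mirror, no mirror).
RESULTS_MBPS = {
    "64k": (240.01, 240.02),
    "8k": (58.87, 96.8),
    "4k": (29.34, 37.54),
}


def throughput_overhead_pct(mirror: float, no_mirror: float) -> float:
    """Share of the unreplicated throughput lost when the volume is mirrored."""
    return (no_mirror - mirror) / no_mirror * 100


if __name__ == "__main__":
    for size, (mirror, no_mirror) in RESULTS_MBPS.items():
        print(f"{size}: {throughput_overhead_pct(mirror, no_mirror):.2f}%")
```

Running it prints 0.00%, 39.18% and 21.84%, matching the table.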

| Write Size | Avg Latency (ms) | Avg Latency Overhead |
|---|---|---|
| 64k-Mirror | 6.247 | -0.02% |
| 64k-NoMirror | 6.248 | |
| 8k-Mirror | 3.183 | 39.21% |
| 8k-NoMirror | 1.935 | |
| 4k-Mirror | 3.194 | 21.88% |
| 4k-NoMirror | 2.495 | |
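
Checking the latency column the same way shows that it uses the mirrored latency as the denominator, (Mirror - NoMirror) / Mirror, which is why it tracks the throughput overhead almost exactly. Measured against the unreplicated baseline instead, 8k latency rises by about 64.5% and 4k latency by about 28%. The sketch below also applies Little's law (throughput = I/Os in flight x block size / latency) with the 24 writes in flight implied by `-t8 -o3`. Assuming the MBps figures are diskspd's MiB/s output, the estimates land within roughly 0.1% of the measured throughput, which is consistent with both tables describing the same runs. Names are illustrative.

```python
# Average write latency (ms) and throughput (MBps) copied from the tables above,
# stored as (mirror, no mirror) pairs.
LATENCY_MS = {"64k": (6.247, 6.248), "8k": (3.183, 1.935), "4k": (3.194, 2.495)}
THROUGHPUT = {"64k": (240.01, 240.02), "8k": (58.87, 96.8), "4k": (29.34, 37.54)}
BLOCK_BYTES = {"64k": 64 * 1024, "8k": 8 * 1024, "4k": 4 * 1024}
IN_FLIGHT = 8 * 3  # -t8 threads x -o3 outstanding I/Os per thread


def table_latency_overhead_pct(mirror: float, no_mirror: float) -> float:
    """Reproduces the table's column: the denominator is the mirrored latency."""
    return (mirror - no_mirror) / mirror * 100


def latency_increase_pct(mirror: float, no_mirror: float) -> float:
    """Conventional increase relative to the unreplicated baseline."""
    return (mirror - no_mirror) / no_mirror * 100


def littles_law_mib_s(block_bytes: int, latency_ms: float) -> float:
    """Throughput implied by Little's law: in-flight I/Os x size / latency."""
    return IN_FLIGHT * block_bytes / (latency_ms / 1000) / 2**20


if __name__ == "__main__":
    for size, (lat_m, lat_n) in LATENCY_MS.items():
        print(
            f"{size}: table overhead {table_latency_overhead_pct(lat_m, lat_n):.2f}%, "
            f"increase over baseline {latency_increase_pct(lat_m, lat_n):.2f}%"
        )
        pairs = zip(("mirror", "no mirror"), (lat_m, lat_n), THROUGHPUT[size])
        for label, lat, measured in pairs:
            est = littles_law_mib_s(BLOCK_BYTES[size], lat)
            print(f"  {label}: estimated {est:.2f} vs measured {measured:.2f}")
```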

Conclusions

The 64k and 4k write sizes both incur overhead that could be considered “acceptable” for synchronous replication: at 64k the overhead is effectively zero, and at 4k it is about 22%. The 8k write size incurs noticeably more overhead (about 39%), although its average latency of 3.183 ms is still quite low.

-Dave Bermingham, Director, Customer Success